Combining OpenAI’s chat completion model with a vector database lets large language models (LLMs) answer questions about specific content in personal documents. The approach uses text embeddings to find the documents most relevant to a question, queries the database for the nearest document vectors, and builds a prompt that contains both the question and the retrieved documents. The goal is an intelligent question answering system that gives useful responses to questions about personal documents. This article explores how vector search over OpenAI embeddings can extract information and insights from a diverse range of documents, including the potential for searching through a large number of PDFs.

Understanding OpenAI’s Chat Completion Model and Vector Database

Overview of OpenAI’s chat completion model and Pinecone’s vector database

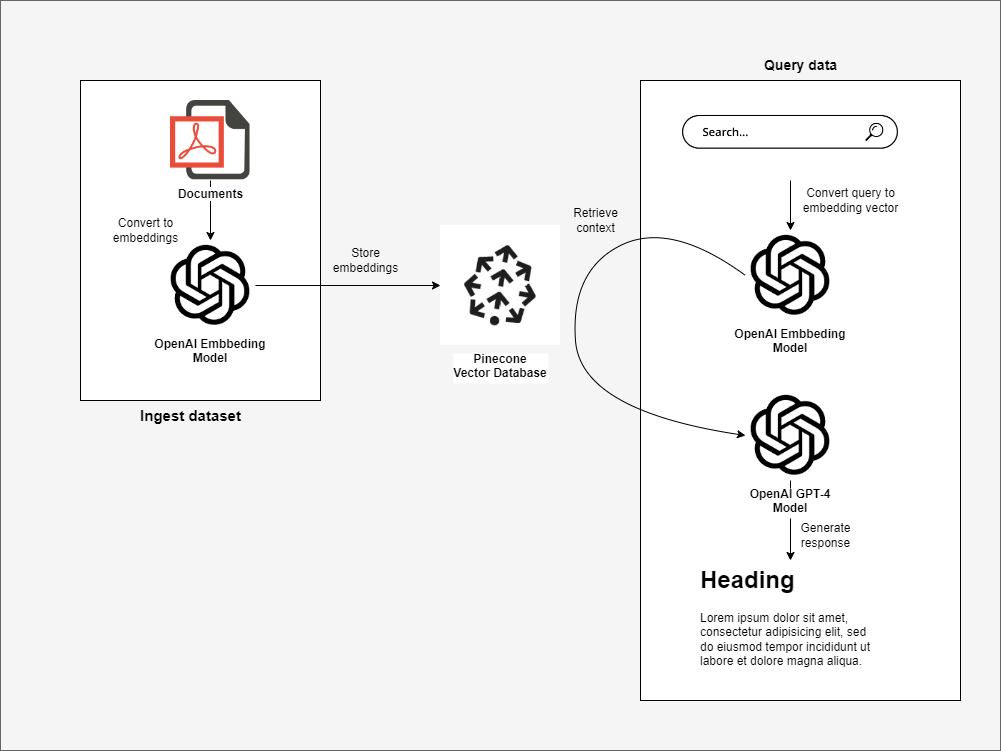

OpenAI’s chat completion model and Pinecone’s vector database work together to let large language models answer specific questions about personal documents. The chat completion model receives a prompt that contains the question and the documents, and is asked to answer based on the text of those documents. The Pinecone vector database stores large numbers of vectors and queries them efficiently, so the system can retrieve the document vectors nearest to the question and use the matching documents to form the prompt.

Explanation of how the system enables large language models to answer questions about specific content from personal documents

Implementing the system involves setting up accounts and API keys for OpenAI and Pinecone, creating a Pinecone index, embedding and storing documents in that index, and querying the index to retrieve relevant documents. OpenAI’s chat completion endpoint then answers questions based on the retrieved documents. Practical considerations include data privacy, the limitations of the embedding model, and alternatives to OpenAI and Pinecone.

Creating a Prompt for Document-Related Questions

Creating a prompt that includes both the question and the documents is what lets OpenAI’s chat completion model answer questions about a set of documents. A well-structured prompt states the question clearly, supplies the relevant documents as context, and asks the model to base its answer on them.
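As a rough illustration of such a prompt, the sketch below joins the retrieved documents and the question into one string. The function name `build_prompt`, the instruction wording, and the sample documents are assumptions made for this example, not a prescribed format.

```python
def build_prompt(question: str, documents: list[str]) -> str:
    """Combine retrieved documents and the user's question into one prompt."""
    # Number each document so the individual sources stay distinguishable.
    context = "\n\n".join(
        f"Document {i}:\n{doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the documents below. "
        "If the documents do not contain the answer, say so.\n\n"
        f"{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


# Hypothetical sample data for illustration only.
prompt = build_prompt(
    "When is the report due?",
    ["The quarterly report is due on 15 March.", "Lunch is served at noon."],
)
print(prompt)
```

Telling the model to rely only on the supplied documents, and to say so when they do not contain the answer, is one common way to keep answers tied to the documents.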

Utilizing text embeddings to find the most relevant documents for the question

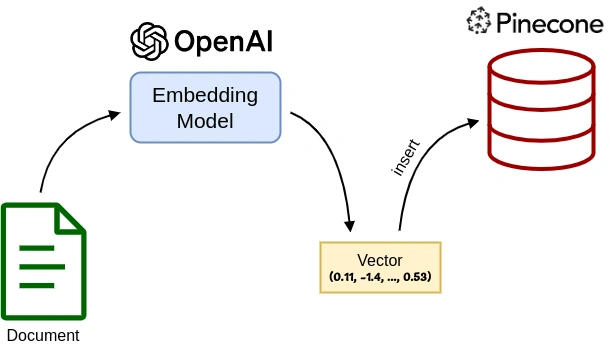

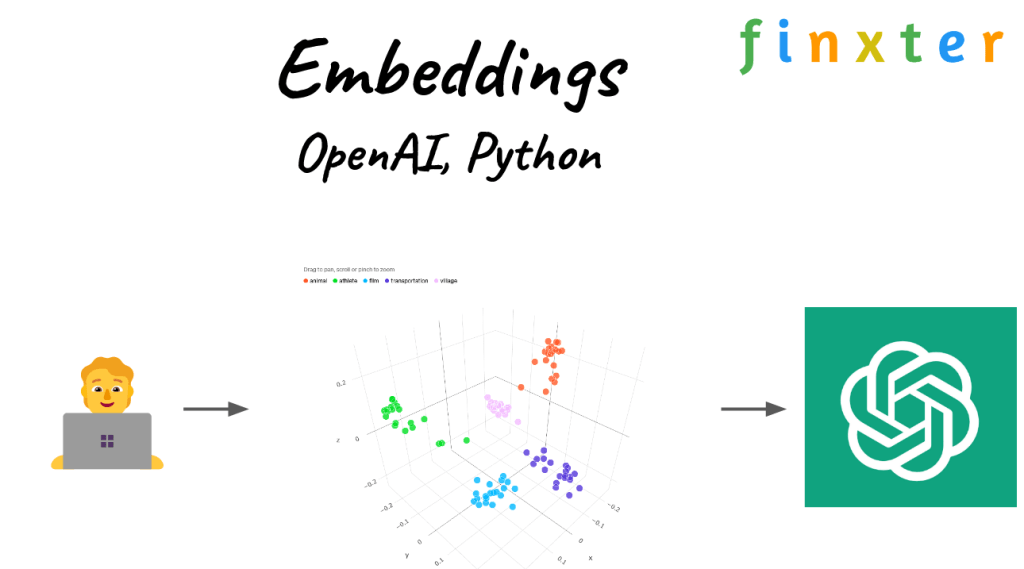

Text embeddings identify the documents most pertinent to a given question. An embedding is a high-dimensional numerical vector that captures the semantic meaning of a text, so texts with similar meanings have vectors that lie close together. Comparing the question’s embedding with the document embeddings therefore surfaces the most relevant sources, which improves the accuracy and depth of the chat completion model’s answer.
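To make "semantically close" concrete, here is a minimal sketch that ranks a few texts by cosine similarity to a question vector. The three-dimensional vectors are invented for illustration; real embeddings come from an embedding model and have far more dimensions. Cosine similarity is one common choice of measure, assumed here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", hand-written for this example.
toy_embeddings = {
    "How to reset a password": [0.9, 0.1, 0.0],
    "Recovering account access": [0.8, 0.3, 0.1],
    "Chocolate cake recipe": [0.0, 0.2, 0.9],
}
question_vector = [0.9, 0.15, 0.0]  # pretend embedding of "I forgot my password"

# Sort document titles from most to least similar to the question.
ranked = sorted(
    toy_embeddings,
    key=lambda title: cosine_similarity(question_vector, toy_embeddings[title]),
    reverse=True,
)
print(ranked)
```

The two account-related texts rank above the recipe because their vectors point in nearly the same direction as the question vector.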

Implementing OpenAI Chat Completion Model and Pinecone Index

Implementing the OpenAI chat completion model and Pinecone index means setting up the infrastructure these tools need: creating a Pinecone index, embedding and storing documents in it, and querying it to retrieve the documents most relevant to a question. Together, these components combine a large language model with a vector database to form a question answering system.

Handling Rate Limits and Document Size Considerations

- Embedding requests are subject to rate limits on the number of tokens processed, so document embedding has to be paced to stay within those limits.

- Splitting large documents into smaller chunks before embedding keeps each input within the model’s input length limit while still capturing the meaning of long sources.
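Both points can be sketched in a few lines. The example below splits text on whitespace as a stand-in for real token counting, groups chunks into batches, and retries a failing call with exponential backoff. The helper names, the word-based limits, and the catch-all exception are assumptions for illustration; a real script would count tokens with the model's tokenizer and catch the client's specific rate-limit error.

```python
import time


def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks; each shares `overlap` words with the previous one."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def batched(items: list[str], batch_size: int) -> list[list[str]]:
    """Group chunks into fixed-size batches, one batch per embedding request."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def with_backoff(call, retries: int = 5, base_delay: float = 1.0, sleep=time.sleep):
    """Run `call()`, waiting base_delay * 1, 2, 4, ... seconds between failed attempts."""
    for attempt in range(retries):
        try:
            return call()
        except Exception:  # assumption: narrow this to the client's rate-limit error
            if attempt == retries - 1:
                raise  # out of retries, surface the original error
            sleep(base_delay * 2 ** attempt)


sample = " ".join(str(n) for n in range(10))
print(chunk_words(sample, max_words=4, overlap=1))
```

The overlap repeats a few words across chunk boundaries so that a sentence cut in two still appears whole in at least one chunk.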

Practical Application and Considerations

Combining large language models with vector databases offers a user-friendly way to answer complex questions from diverse sources. Data privacy matters for anyone working with sensitive or enterprise data, and alternatives to a hosted vector database, such as Chroma and Faiss, are worth considering in that case.

Impact and Future Potential

Text embeddings and vector search have attracted significant interest in the tech community, with discussions focusing on searching and answering questions over large document sets. The potential for creating educational content, such as lecture plans and tutorials, points to a role for text embeddings in making knowledge easier to share and access.

Querying the Database for Document Retrieval

Retrieving documents involves using OpenAI’s embedding model and the Pinecone vector database to find the documents most relevant to a given query.

Explanation of querying the Pinecone index with the embedding vector of the question

When the Pinecone index is queried with the question’s embedding vector, it compares that vector with the stored document vectors and returns the closest matches, which correspond to the most relevant documents.
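A minimal sketch of this query step follows, assuming the current `openai` and `pinecone` Python clients. The function name `retrieve_documents`, the default model `text-embedding-3-small`, and the `"text"` metadata key are assumptions for illustration. The clients are passed in as arguments, so the function itself needs no API keys to be defined.

```python
def retrieve_documents(openai_client, index, question: str,
                       top_k: int = 3,
                       embedding_model: str = "text-embedding-3-small") -> list[str]:
    """Embed the question, then ask the index for the top_k nearest document vectors."""
    # The question must be embedded with the same model used for the documents.
    response = openai_client.embeddings.create(model=embedding_model, input=[question])
    question_vector = response.data[0].embedding

    result = index.query(vector=question_vector, top_k=top_k, include_metadata=True)

    # Assumes each vector was stored with its source text under the "text" metadata key.
    return [match.metadata["text"] for match in result.matches]
```

Here `openai_client` would be an `OpenAI()` instance and `index` a Pinecone index handle. Storing the original text as metadata is one simple way to get from a matching vector back to the document it represents.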

Retrieving the nearest document vectors in the database

Retrieving the nearest document vectors means ranking the stored document embeddings by their similarity to the question’s embedding and keeping the top matches, which makes document retrieval both efficient and accurate.

Implementation of the Intelligent Question Answering System

Setting up OpenAI and Pinecone for implementation

The first step in implementing an intelligent question answering system is to set up OpenAI and Pinecone. This means creating accounts on both platforms and obtaining API keys. The OpenAI and Pinecone clients are then initialized in a Python script so that it can communicate with their APIs.
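One way this setup might look is sketched below, assuming the keys are kept in the environment variables `OPENAI_API_KEY` and `PINECONE_API_KEY` and that the `openai` and `pinecone` packages are installed. The helper names are hypothetical. The SDK imports sit inside `make_clients` so the key check can run even where the packages are absent.

```python
import os


def load_api_keys(env=os.environ) -> dict[str, str]:
    """Read both API keys from environment variables and fail early if one is missing."""
    names = ("OPENAI_API_KEY", "PINECONE_API_KEY")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in names}


def make_clients(keys: dict[str, str]):
    """Create the OpenAI and Pinecone clients from the loaded keys."""
    # Imported here so load_api_keys stays usable without the SDKs installed.
    from openai import OpenAI
    from pinecone import Pinecone

    openai_client = OpenAI(api_key=keys["OPENAI_API_KEY"])
    pinecone_client = Pinecone(api_key=keys["PINECONE_API_KEY"])
    return openai_client, pinecone_client
```

Reading keys from the environment rather than hard-coding them keeps secrets out of the script and out of version control.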

Creating a Pinecone index and embedding and storing documents

The next step is to create a Pinecone index programmatically. This index stores the documents used for answering questions and makes them retrievable. The documents are loaded and embedded with the OpenAI client’s embeddings endpoint, and the resulting vectors are stored in the Pinecone index with the upsert method.
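The embed-and-store step could be sketched as follows. The function name `embed_and_upsert`, the default model `text-embedding-3-small`, the index name, and the serverless cloud and region in the comments are all assumptions for illustration. The clients are passed in rather than created here.

```python
# Creating the index is a one-off step, roughly (assumed name, cloud and region):
#   from pinecone import ServerlessSpec
#   pc.create_index(name="documents", dimension=1536, metric="cosine",
#                   spec=ServerlessSpec(cloud="aws", region="us-east-1"))
#   index = pc.Index("documents")
# The dimension must match the embedding model (1536 for text-embedding-3-small).


def embed_and_upsert(openai_client, index, documents: dict[str, str],
                     embedding_model: str = "text-embedding-3-small") -> int:
    """Embed each document and store (id, vector, original text) in the index."""
    ids = list(documents)
    texts = [documents[doc_id] for doc_id in ids]

    # One request embeds the whole list; results come back in input order.
    response = openai_client.embeddings.create(model=embedding_model, input=texts)

    vectors = [
        {"id": doc_id, "values": item.embedding, "metadata": {"text": text}}
        for doc_id, text, item in zip(ids, texts, response.data)
    ]
    index.upsert(vectors=vectors)
    return len(vectors)
```

Keeping the source text in the metadata means a later query can return readable content directly, without a second lookup by ID.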

Utilizing the OpenAI client to ask the chat completion model to answer the question based on the document

Once the documents are stored in the Pinecone index, the system uses the OpenAI client to ask the chat completion model to answer questions about them. It queries the Pinecone index for the relevant documents, combines their content with the question into a prompt, and sends that prompt to the chat completion model. The model then answers the question based on the document content.
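The final call to the chat completion model might look like the sketch below, which assumes the documents have already been retrieved. The function name `answer_from_documents`, the model name `gpt-4o-mini`, and the wording of the system message are assumptions for illustration.

```python
def answer_from_documents(openai_client, question: str, documents: list[str],
                          chat_model: str = "gpt-4o-mini") -> str:
    """Ask the chat completion model to answer using only the given documents."""
    context = "\n\n".join(documents)
    messages = [
        # The system message sets the rule: stay within the supplied documents.
        {"role": "system",
         "content": "Answer using only the provided documents. "
                    "If they do not contain the answer, say you do not know."},
        # The user message carries the retrieved text followed by the question.
        {"role": "user",
         "content": f"Documents:\n{context}\n\nQuestion: {question}"},
    ]
    response = openai_client.chat.completions.create(model=chat_model, messages=messages)
    return response.choices[0].message.content
```

Putting the grounding instruction in the system message and the documents in the user message is one reasonable split; a single combined prompt would also work.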

Exploring OpenAI’s Vector Search Capabilities

Vector search over OpenAI embeddings is attracting growing interest as a way to extract insights from a diverse range of documents. It can help answer complex questions over a large set of documents, including recent SCOTUS decisions and other legal documents, which shows its practical value in real-world scenarios.

Utilizing OpenAI’s Vector Search for Extraction of Insights

Vector search over OpenAI embeddings allows insights to be extracted efficiently from a wide variety of documents. Because embeddings capture the context and meaning of the documents, the system can answer complex questions about them. A step-by-step implementation in Python covers setting up OpenAI and Pinecone, creating a Pinecone index, embedding and storing documents, and answering questions about the documents with the combined system. This practical approach is useful for developers and data scientists who want to build intelligent question answering systems.

Potential for Leveraging OpenAI Embeddings to Search Through PDFs

Using OpenAI embeddings to search through a large number of PDFs offers significant potential for knowledge extraction. The technique applies to diverse sources, including legal documents, research papers, and educational materials. Efficient and accurate search over PDFs opens up new possibilities for researchers, educators, and professionals who need insights from large volumes of documents.

Privacy Implications and Alternatives

While this approach offers a powerful way to extract knowledge, privacy implications matter when working with sensitive information. Alternatives to OpenAI and Pinecone include using open source LLMs, self-hosting a database solution like Chroma, or using Faiss. Weighing these trade-offs when choosing tools and services for a question answering system keeps privacy and ethical considerations in view.

Examples of Successful Implementation

- The system can answer questions about specific content from documents, which shows the practical benefit of the approach.

- The technology could also be incorporated into educational platforms, where it may enhance learning experiences.

Community Engagement and Future Potential

Interest in developing and applying vector search over OpenAI embeddings is growing, with active community discussion about extracting insights from multiple documents. This suggests room for further advances in knowledge extraction, along with enthusiasm for bringing the technology to educational platforms and other practical applications.

Conclusion

In conclusion, OpenAI’s chat completion model and Pinecone’s vector database together offer a powerful solution for answering questions about a set of documents. Understanding how the two work in combination, creating effective prompts, and querying the database for relevant documents are the steps needed to implement an intelligent question answering system. Vector search over OpenAI embeddings extends the potential for knowledge extraction across fields, including educational platforms and other practical applications.